In this project, XARA, an AI-based conversation training app, was developed by afca.ag on behalf of the University of St.Gallen. The training consists of three modules covering three conversation topics (recruiting, conflict, and quitting). It is conducted in two virtual extended environments: an immersive virtual environment (IVE, using VR headsets) and a low-immersive virtual environment (L-IVE, using a standard computer setup). The interface and user experience design of the training sessions are identical across both environments, and participants engage in adaptive learning conversations with AI-based avatars.

Can conversational competence be effectively trained in virtual extended environments? In this research project, we compare immersive and low-immersive virtual environments for AI-based conversation training.

Face-to-face conversation plays a critical role in professional communication, especially in emotionally charged and demanding situations such as recruitment, conflict resolution, or resignation discussions. Yet in everyday work life, opportunities to practice these conversations in a structured and supportive setting are rare. Advances in the quality of virtual reality (VR) and the rise of artificial intelligence (AI) make new forms of adaptive training possible. They enable realistic learning scenarios with AI avatars that no longer rely on complex pre-programming but instead respond based on behavioral and content prompts. By simulating realistic interactions and conversations, this approach promises new learning experiences. AI avatars in virtual environments can be particularly valuable wherever conversation skills are crucial, for example in training human resources professionals and managers.

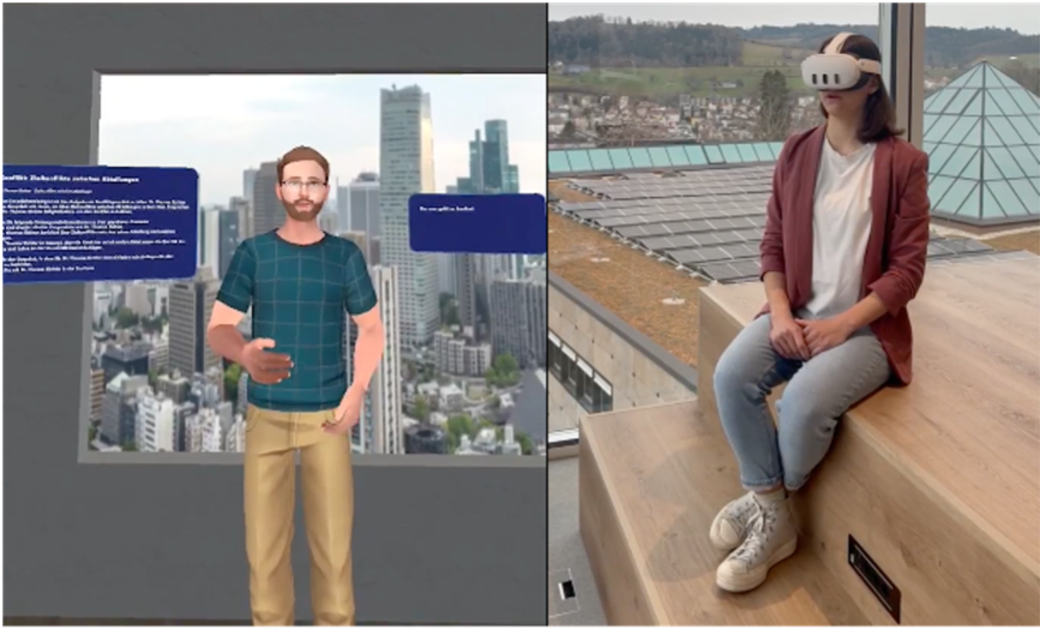

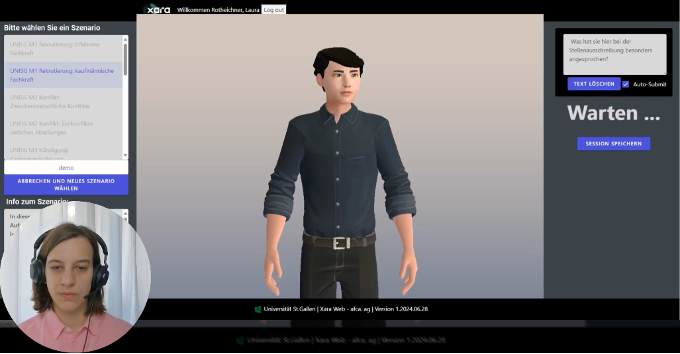

IVE

L-IVE

The illustration shows conversation training with the XARA app in the two environments, IVE (top) and L-IVE (bottom). Training in IVE uses a VR headset; training in L-IVE uses a computer screen with an internal or external microphone and speakers.

All participants complete the training in both IVE and L-IVE. Conversational competence is assessed repeatedly: at the beginning of the study and after each training session. This within-subject design allows us to compare conversational competence across the two levels of immersion and to evaluate the training's ecological validity. Measuring ecological validity is crucial to understanding whether these technologies create psychologically meaningful and transferable social learning experiences.